Intro

Discover the 5 key differences among cloud computing, edge computing, AI, machine learning, and IoT, and use them to make informed technology decisions.

Technology evolves constantly, and new terms and concepts emerge with it, sometimes causing confusion among users. Understanding how these technologies differ is crucial for making informed decisions and keeping up with current trends. In this article, we will delve into the 5 key differences between cloud computing, edge computing, artificial intelligence, machine learning, and the Internet of Things, exploring their definitions, applications, and implications.

The importance of recognizing these differences lies in their potential impact on our daily lives, from how we communicate and access information to how businesses operate and innovate. By grasping these distinctions, individuals can better navigate the complex landscape of technology, making more informed choices about the tools and services they use. Moreover, businesses can leverage this understanding to develop strategies that capitalize on the unique benefits of each technology, driving growth and competitiveness.

As we embark on this journey to explore the 5 key differences, it's essential to consider the broader context of technological innovation. The rapid pace of advancement in fields like artificial intelligence, blockchain, and the Internet of Things (IoT) has led to the emergence of new concepts and terms, some of which may seem interchangeable but hold significant differences. By dedicating time to understand these nuances, readers can enhance their technological literacy, empowering themselves to engage more effectively with the digital world.

Introduction to Key Technological Concepts

To begin our exploration, let's introduce the concepts that will be at the forefront of our discussion. These include cloud computing, edge computing, artificial intelligence (AI), machine learning (ML), and the Internet of Things (IoT). Each of these technologies has been transformative in its own right, offering solutions to various challenges faced by individuals and organizations. However, their applications, benefits, and operational mechanisms differ significantly, highlighting the need for a clear understanding of their distinctions.

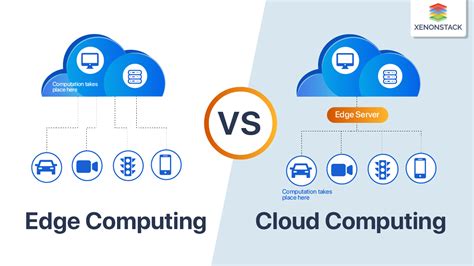

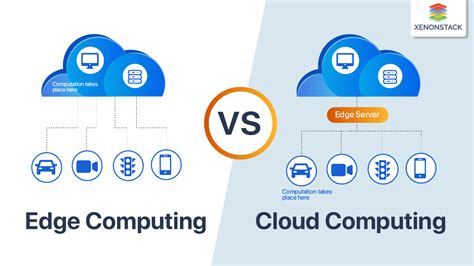

Cloud Computing vs. Edge Computing

One of the primary distinctions in the technological landscape is between cloud computing and edge computing. Cloud computing refers to the delivery of computing services over the internet, where resources such as servers, storage, databases, software, and applications are provided to users on demand. This model has changed the way businesses operate, offering scalability, flexibility, and cost savings. Edge computing, on the other hand, is a distributed computing paradigm that brings computation and data storage closer to the location where they are needed, reducing latency and bandwidth usage in applications such as IoT, augmented reality, and content delivery.
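To make the bandwidth point concrete, here is a minimal sketch of the edge pattern described above: a device summarizes its readings locally and sends one small record upstream instead of every raw value. The function name `summarize_at_edge`, the sample readings, and the alert threshold are all assumptions made up for illustration, not part of any real platform.

```python
from statistics import mean


def summarize_at_edge(readings, alert_threshold):
    """Reduce a batch of raw readings to one small summary record."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alert": max(readings) > alert_threshold,
    }


# Hypothetical temperature samples collected by one device.
raw_readings = [21.0, 21.5, 22.0, 21.5, 35.0, 22.0]

# Cloud-style: every raw reading travels upstream for central processing.
messages_sent_cloud = len(raw_readings)

# Edge-style: the device processes locally and sends a single summary.
summary = summarize_at_edge(raw_readings, alert_threshold=30.0)
messages_sent_edge = 1

print(summary)
print(messages_sent_cloud, "messages vs", messages_sent_edge)
```

In this toy case the device sends one message instead of six, and the alert decision is made locally without waiting on a round trip to a data center. Real deployments usually mix both models, keeping raw data at the edge and sending summaries to the cloud for long-term storage and analysis.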

Benefits of Cloud and Edge Computing

The benefits of cloud computing include enhanced collaboration, improved scalability, and reduced maintenance costs. Edge computing, meanwhile, offers near-real-time processing and reduced latency, and it can limit data exposure by minimizing the amount of data that needs to be transmitted to the cloud or a central data center.

Artificial Intelligence (AI) and Machine Learning (ML)

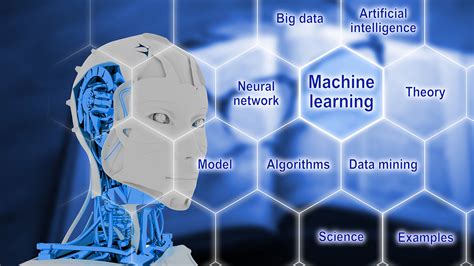

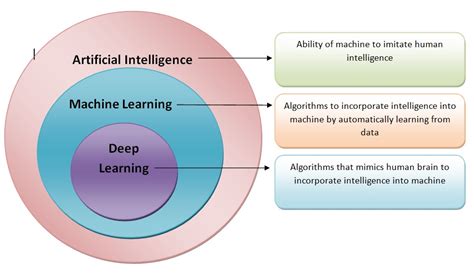

Artificial intelligence (AI) and machine learning (ML) are often used interchangeably, but they represent different concepts within the broader field of computer science. AI refers to the development of computer systems that can perform tasks that typically require human intelligence, such as visual perception, speech recognition, decision-making, and language translation. ML, a subset of AI, involves the use of algorithms that enable machines to learn from data and improve their performance on a specific task without being explicitly programmed.
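The phrase "without being explicitly programmed" can be illustrated with a small sketch. The first function below uses a cutoff that a person chose by hand; the second derives its cutoff from labeled examples. The data, the function names, and the simple threshold-search method are assumptions chosen for illustration, and real ML systems use far richer models.

```python
def rule_based_is_hot(temp_c):
    # Explicitly programmed: a person chose the cutoff of 30.
    return temp_c > 30.0


def learn_threshold(samples):
    """Pick the cutoff that misclassifies the fewest labeled samples."""
    values = sorted(t for t, _ in samples)
    # Candidate cutoffs: midpoints between neighbouring values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(cut):
        return sum((t > cut) != label for t, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (temperature, was it described as "hot"?)
training = [
    (18.0, False), (22.0, False), (25.0, False),
    (27.0, True), (31.0, True), (35.0, True),
]

threshold = learn_threshold(training)


def learned_is_hot(temp_c):
    # The cutoff comes from the data, not from the programmer.
    return temp_c > threshold


print(threshold)
print(rule_based_is_hot(28.0), learned_is_hot(28.0))
```

Both functions make decisions automatically, but only the second changes its behavior when the training data changes. That is the sense in which ML is a subset of AI: it is one way of building such systems, distinguished by learning from data.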

Applications of AI and ML

The applications of AI and ML are vast and diverse, ranging from virtual assistants and image recognition systems to predictive analytics and natural language processing. These technologies have the potential to automate routine tasks, enhance customer experiences, and drive business innovation.

Internet of Things (IoT)

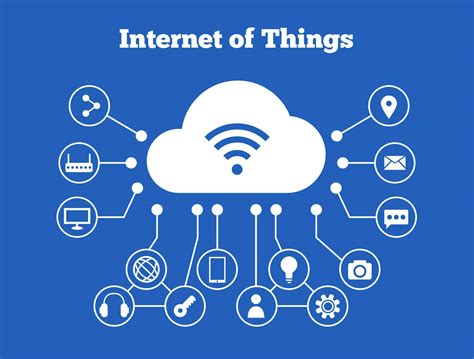

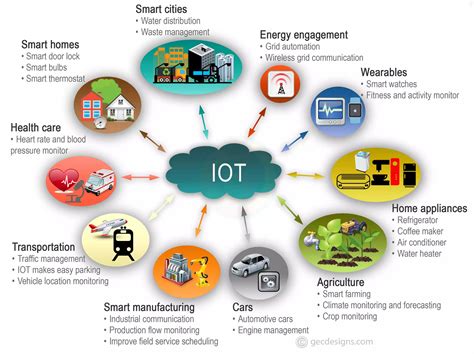

The Internet of Things (IoT) refers to the network of physical devices, vehicles, home appliances, and other items that are embedded with sensors, software, and connectivity, allowing them to collect and exchange data. IoT has transformed the way we live and work, enabling smart homes, cities, and industries through real-time monitoring, automation, and data-driven insights.
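The collect-and-exchange loop described above can be sketched with simulated devices. Everything here is hypothetical: the `SimulatedSensor` class, the device names, and the message fields are invented for illustration, and the readings are random numbers rather than real measurements. Real devices would typically publish over a network protocol rather than an in-process function call.

```python
import json
import random


class SimulatedSensor:
    """Stand-in for a physical device with one temperature sensor."""

    def __init__(self, device_id, seed=0):
        self.device_id = device_id
        self._rng = random.Random(seed)

    def read(self):
        # Fake measurement; a real device would query hardware here.
        return round(self._rng.uniform(18.0, 26.0), 1)

    def to_message(self, sequence):
        # Serialize a reading as JSON, a common exchange format.
        return json.dumps({
            "device_id": self.device_id,
            "seq": sequence,
            "temperature_c": self.read(),
        })


def collect(sensors, rounds):
    """Gather messages from all devices, as a hub or gateway might."""
    inbox = []
    for seq in range(rounds):
        for sensor in sensors:
            inbox.append(json.loads(sensor.to_message(seq)))
    return inbox


sensors = [
    SimulatedSensor("thermostat-1", seed=1),
    SimulatedSensor("thermostat-2", seed=2),
]
inbox = collect(sensors, rounds=3)

for message in inbox:
    print(message)
```

Each message carries a device identifier and a sequence number so the receiver can tell readings apart, which hints at the interoperability concern: devices from different vendors only work together if they agree on a format like this.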

IoT Applications and Challenges

IoT applications span across various sectors, including healthcare, transportation, and manufacturing, offering benefits such as improved efficiency, enhanced safety, and reduced costs. However, IoT also presents challenges related to security, privacy, and interoperability, which must be addressed to fully realize its potential.

Conclusion and Future Directions

In conclusion, understanding the 5 key differences between cloud computing, edge computing, AI, ML, and IoT is essential for navigating the complex and evolving technological landscape. Each of these technologies offers unique benefits and applications, and recognizing their distinctions can empower individuals and businesses to make informed decisions and capitalize on their potential. As technology continues to advance, it's crucial to stay informed about the latest developments and trends, fostering a culture of innovation and digital literacy.

Final Thoughts

As we reflect on the 5 key differences, it's clear that the future of technology holds immense promise and opportunity. By embracing these advancements and understanding their nuances, we can unlock new possibilities for growth, innovation, and progress. Whether you're a tech enthusiast, a business leader, or simply a curious individual, the world of technology has something to offer, and staying informed is the first step towards harnessing its potential.

What is the primary difference between cloud computing and edge computing?

The primary difference lies in where the data processing occurs. Cloud computing processes data in a centralized location (the cloud), while edge computing processes data at the edge of the network, closer to the source of the data.

How does AI differ from ML?

AI refers to the broader field of developing intelligent machines, while ML is a subset of AI that specifically focuses on algorithms that enable machines to learn from data and improve their performance without being explicitly programmed.

What are some common applications of IoT?

IoT has applications in smart homes, cities, industries, healthcare, transportation, and manufacturing, among others, enabling real-time monitoring, automation, and data-driven insights.
